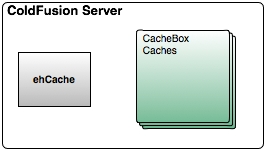

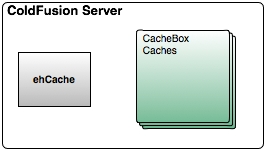

A single instance cache is an in-process cache that lives within the same JVM heap as your application. In the ColdFusion world, this is the cache topology that ColdFusion 9 offers: an instance of ehCache that spans the entire JVM of the running ColdFusion server. There are pros and cons to each topology, and you must evaluate the risks and benefits of each approach.
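To make the in-process idea concrete, here is a minimal Python sketch of a cache that lives in the same process and memory as the application code. It is not CacheBox's API: `InProcessCache`, its parameters and its methods are hypothetical names. Entries are plain references held in a dictionary, so a lookup involves no serialization or network hop and returns the very same object. That same property is why such a cache competes with the application for the same heap.

```python
import time
from collections import OrderedDict


class InProcessCache:
    """Hypothetical in-process cache: entries live in this process's memory."""

    def __init__(self, max_objects=100, default_timeout=60.0, clock=time.monotonic):
        self.max_objects = max_objects
        self.default_timeout = default_timeout
        self.clock = clock
        self._entries = OrderedDict()  # key -> (value, expires_at), in LRU order

    def set(self, key, value, timeout=None):
        ttl = self.default_timeout if timeout is None else timeout
        self._entries[key] = (value, self.clock() + ttl)
        self._entries.move_to_end(key)
        # Evict the least recently used entries once the size limit is exceeded.
        while len(self._entries) > self.max_objects:
            self._entries.popitem(last=False)

    def get(self, key, default=None):
        entry = self._entries.get(key)
        if entry is None:
            return default
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._entries[key]  # expired: drop it lazily on read
            return default
        self._entries.move_to_end(key)  # mark as recently used
        return value  # same object reference, no copy or deserialization
```

The injectable `clock` is only there so expiry can be exercised deterministically. In normal use the monotonic default applies.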

A single instance approach is definitely the easiest to set up and use. However, if you need to add more ColdFusion instances or cluster your system, a single instance is no longer of much use, because the servers will not be synchronized with the same cached data. CacheBox also allows you to create as many instances of itself as you need, and as many CacheBox caches as you need. This gives you great flexibility to create and configure multiple instance caches in a single ColdFusion server instance. If you are on shared hosting, for example, you do not have to worry about the server's underlying cache configuration (there is only one); instead, you can configure caching engines for your application ONLY! This is of great benefit and offers far more flexibility than dealing with the single cache instance on the ColdFusion server.
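The flexibility described above can be sketched as one registry of named caches per application, in place of a single server-wide cache. This is a hypothetical Python illustration of the idea only. `CacheRegistry`, `SimpleCache` and their methods are made-up names and do not mirror CacheBox's configuration DSL.

```python
class SimpleCache:
    """Stand-in for any cache provider; here just a size-bounded dict."""

    def __init__(self, max_objects):
        self.max_objects = max_objects
        self._data = {}

    def set(self, key, value):
        if key not in self._data and len(self._data) >= self.max_objects:
            # Naive eviction for the sketch: drop the oldest inserted key.
            self._data.pop(next(iter(self._data)))
        self._data[key] = value

    def get(self, key, default=None):
        return self._data.get(key, default)


class CacheRegistry:
    """One registry per application, each holding its own named caches."""

    def __init__(self, app_name):
        self.app_name = app_name
        self._caches = {}

    def add_cache(self, name, max_objects=100):
        if name in self._caches:
            raise ValueError(f"cache {name!r} already exists in {self.app_name}")
        self._caches[name] = SimpleCache(max_objects)
        return self._caches[name]

    def get_cache(self, name):
        return self._caches[name]
```

Two applications on the same server can each register a cache called `default` with different limits, and neither can see or evict the other's entries.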

Pros:

- Fast access to data, as it lives in the same process
- Easy to set up and configure
- One easy configuration if you are using ColdFusion 11 or Lucee
- You can also have multiple CacheBox caching providers running on one machine (greater flexibility)
- You can also have multiple CacheBox instances running on one machine or have a shared scope instance (greater flexibility)
- Each of your applications, whether ColdBox or not, can leverage CacheBox and create and configure caches (greater flexibility)

Cons:

- Shared resources in a single application server
- Shared JVM heap size, and thus shared available RAM
- Limited scalability
- Not fault tolerant

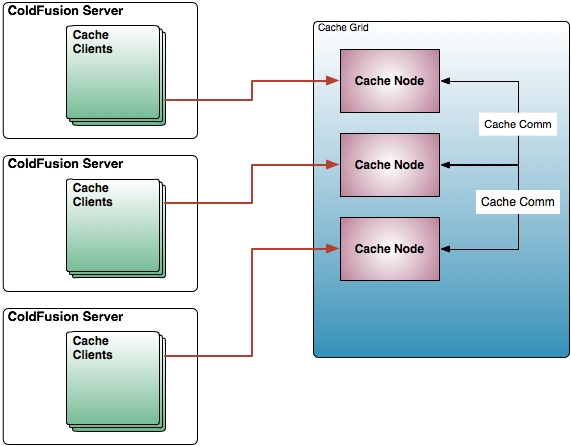

Distributed caching is the big daddy of scalability and extensibility. The core concept is partitioning the cache data across the members of the cache cluster, creating a grid of cached data that can scale rather easily. There are several major players out there, such as EHCache with Terracotta, Oracle Coherence, and our favorite: Couchbase NoSQL.

I suggest looking at all options to find what best suits your requirements. Please note that each vendor has its own flavor of distributed caching, which might not match our diagram. Our diagram is just a visual representation for illustration purposes.
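Partitioning is what separates a distributed cache from a replicated one. Each entry lives on one member, or on a few members if backups are kept, so total capacity grows as members join. The Python sketch below uses consistent hashing, one common way to assign keys to members. This is an illustrative assumption, not how any particular product (EHCache with Terracotta, Coherence, Couchbase) necessarily partitions its data, and it leaves out backups and failover. The class names are hypothetical.

```python
import bisect
import hashlib


class ConsistentHashRing:
    """Maps keys to cluster members; each member owns several points on a ring."""

    def __init__(self, nodes=(), vnodes=100):
        self.vnodes = vnodes
        self._points = []  # sorted hash positions on the ring
        self._owner = {}   # hash position -> member name
        for node in nodes:
            self.add_node(node)

    @staticmethod
    def _hash(text):
        # Stable hash (unlike Python's salted hash()) so every client agrees.
        return int.from_bytes(hashlib.sha256(text.encode()).digest()[:8], "big")

    def add_node(self, node):
        for i in range(self.vnodes):
            point = self._hash(f"{node}#{i}")
            bisect.insort(self._points, point)
            self._owner[point] = node

    def node_for(self, key):
        if not self._points:
            raise LookupError("no cluster members")
        # First point clockwise from the key's hash, wrapping around the ring.
        idx = bisect.bisect(self._points, self._hash(key)) % len(self._points)
        return self._owner[self._points[idx]]


class DistributedCache:
    """Each member stores only the partition of keys the ring assigns to it."""

    def __init__(self, nodes):
        self.ring = ConsistentHashRing(nodes)
        self.stores = {node: {} for node in nodes}  # each member's local memory

    def set(self, key, value):
        self.stores[self.ring.node_for(key)][key] = value

    def get(self, key, default=None):
        return self.stores[self.ring.node_for(key)].get(key, default)
```

A useful property of this scheme is that adding a member only moves keys onto the new member. Existing members keep the rest of their partitions, which is what lets such a grid grow without reshuffling everything.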

Ortus Solutions, the makers of CacheBox, have created a commercial extension for the open source CFML engine Lucee that adds distributed caching features via Couchbase.

The Ortus Couchbase Extension is a Lucee Server Extension that allows your server to natively connect to a Couchbase NoSQL Server cluster and leverage it for built-in caching, session/client storage and distribution, and much more. With our extension you will be able to scale and extend your Lucee CFML applications with ease.

The extension will enhance your Lucee server with some of the following :

Store session/client variables in a distributed Couchbase cluster

Get rid of sticky session load balancers, come to the round-robin world!

Session/client variable persistence even after restarts

Ability to leverage the RAM resource virtual file system as a cluster-wide file system

Extreme scalability

Cache data can survive server restarts if one goes down, better redundancy

Better cache availability through redundancy

Higher storage availability

Harder to configure and setup (Maybe, terracotta and ColdFusion 9 is super easy)

Not as much serialization and communication costs

Could need load balancing

Cache connection capablities for providing distributed & highly scalable query, object, template, function caching

Higher flexibility

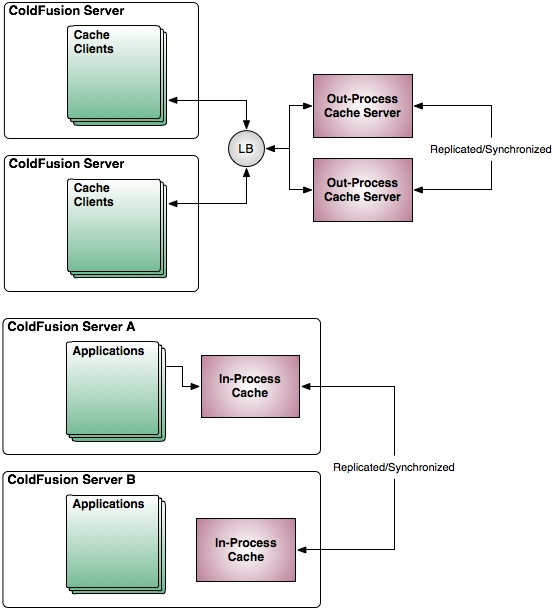

Your storage increases as more cluster members are added

A replicated cache can exist on multiple application server machines, and their contents are replicated, meaning every cache contains all of the data elements. This topology is beneficial because every member of the application cluster has access to all of the elements. However, there are also drawbacks due to the amount of chatter and synchronization required for all data or cache nodes to hold the same fresh data. This approach provides better scalability and redundancy, as you no longer have a single point of failure. A replicated cache can be in-process or out-of-process depending on the caching engine you are using.
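Below is a minimal in-memory Python model of the replication described above. The class name is hypothetical, and the "network" is just a loop over peers. Every write is serialized and pushed to all other members. As a result, each member can answer reads locally and the data survives the loss of one node, while the per-write chatter grows with cluster size. Real engines also bootstrap newly joined members with existing data and handle conflicting writes; both are omitted here.

```python
import pickle


class ReplicatedNode:
    """One member of a hypothetical replicated cache: holds a full copy of the data."""

    def __init__(self, name, cluster):
        self.name = name
        self.cluster = cluster  # shared list of all current members
        self.data = {}          # this member's full copy (no catch-up for late joiners here)
        cluster.append(self)

    def set(self, key, value):
        self.data[key] = value
        payload = pickle.dumps(value)  # serialize once per write
        messages = 0
        for peer in self.cluster:
            if peer is not self:
                # Stand-in for a network send; each peer deserializes its own copy.
                peer.data[key] = pickle.loads(payload)
                messages += 1
        return messages  # N - 1 messages for a cluster of N members

    def get(self, key, default=None):
        return self.data.get(key, default)  # reads never leave the member
```

With four members, a single `set` costs three sends. After one member is removed from the cluster, the remaining members still hold every entry.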

Pros:

- Better scalability
- Cache data can survive server restarts if one goes down, better redundancy
- Better cache availability through redundancy
- Higher storage availability
- Ideal for a small number of application servers

Cons:

- A little bit harder to configure and set up
- High serialization and network communication costs
- Could need load balancing
- Can scale on small amounts only
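A back-of-the-envelope calculation helps explain why a replicated cache "can scale on small amounts only". The model makes two simplifying assumptions: every member dedicates the same RAM to the cache, and overhead is ignored. Under those assumptions, the amount of distinct data a replicated cache holds stays at one member's worth however many members join. A partitioned (distributed) cache grows with the member count, divided by the number of copies kept of each entry. The function names are hypothetical.

```python
def replicated_capacity_gb(nodes, ram_per_node_gb):
    """Distinct data a replicated cache can hold: every member keeps everything."""
    if nodes < 1:
        raise ValueError("need at least one member")
    return ram_per_node_gb  # more members add copies, not space


def distributed_capacity_gb(nodes, ram_per_node_gb, copies=1):
    """Distinct data a partitioned cache can hold with `copies` copies of each entry."""
    if nodes < 1 or not 1 <= copies <= nodes:
        raise ValueError("need 1 <= copies <= nodes")
    return nodes * ram_per_node_gb / copies
```

Take four members with 8 GB each. The replicated cache still holds about 8 GB of distinct data. A single-copy distributed cache holds about 32 GB, or 16 GB if each entry keeps one backup.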

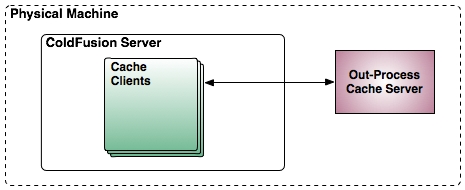

A single instance can also be an out-of-process cache that lives in its own JVM, typically on the same machine as the application server. This approach also has its pros and cons. A typical example of an out-of-process cache is an instance of CouchDB used to store your cache components, with your applications talking to the cache via REST.
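The following Python sketch shows the shape of a client/protocol relationship with an out-of-process cache over REST, using only the standard library. The `/cache/<key>` endpoint layout is a made-up protocol for illustration and is not CouchDB's API. To keep the demo self-contained, the server runs on a background thread. In a real deployment it would be its own process or service, started alongside the machine.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class CacheHandler(BaseHTTPRequestHandler):
    """Hypothetical cache server speaking a tiny REST protocol on /cache/<key>."""

    store = {}               # lives in the server's memory, not the application's
    lock = threading.Lock()  # requests are handled on separate threads

    def _key(self):
        return self.path.removeprefix("/cache/")

    def do_PUT(self):
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        with self.lock:
            self.store[self._key()] = body
        self.send_response(204)
        self.end_headers()

    def do_GET(self):
        with self.lock:
            body = self.store.get(self._key())
        if body is None:
            self.send_error(404)
            return
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging in this sketch


class RestCacheClient:
    """Application-side client: every call is serialized to JSON and sent over HTTP."""

    def __init__(self, base_url):
        self.base_url = base_url.rstrip("/")
        # Empty ProxyHandler: talk to the cache host directly, ignoring proxy env vars.
        self._opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))

    def set(self, key, value):
        req = urllib.request.Request(
            f"{self.base_url}/cache/{key}",
            data=json.dumps(value).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="PUT",
        )
        with self._opener.open(req) as resp:
            resp.read()

    def get(self, key, default=None):
        try:
            with self._opener.open(f"{self.base_url}/cache/{key}") as resp:
                return json.loads(resp.read())
        except urllib.error.HTTPError as err:
            if err.code == 404:
                return default
            raise


def start_cache_server():
    """Bind to an ephemeral localhost port and serve in the background."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), CacheHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address[:2]
    return server, f"http://{host}:{port}"
```

Every cache call now pays for JSON serialization and an HTTP round trip, even on the same machine. This is the trade-off against the in-process topology: in exchange, the cache gets its own memory space and lifecycle.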

Pros:

- Access is still fast, as the cache is on the same machine
- Easy to set up and configure
- Can leverage its own JVM heap, memory, GC, etc., giving more granular control

Cons:

- Might require a Windows or *nix service so the cache engine starts up with the machine
- Still shares resources on the server
- Limited scalability
- Needs startup scripts
- Needs a client of some sort installed on the application server, plus a protocol to talk to it: RMI, JMS, SOAP, REST, etc.
- Not fault tolerant

Out-of-process cache servers can also be clustered to provide better redundancy. However, once you start clustering them, the servers need a way to replicate and synchronize with each other.

There are several cache topologies that you can benefit from, and the right one depends on your business and scalability requirements. This section covers only four distinct approaches. CacheBox can support all of these topologies, as long as the underlying caches support them. However, at this point in time, the CacheBox caching provider only supports the single instance in-process approach.